In a world where car accidents are among the most common causes of damage and injury, it’s no wonder that people want to collect and submit evidence quickly when something goes wrong. Yet auto insurance claims remain a complex, tedious process that relies on many manual steps, human inputs, and subjective judgments.

One company in particular, Autonet, has devised an innovative way to simplify this process. Autonet has developed novel methods to identify the orientation of cars in photos submitted by their owners, which allows a computer vision model to help pinpoint the precise location of damage on a vehicle.

This adds valuable information that can be used to automate, or at least help a human make, the choice of which decision and cost model to apply. It also makes submitting evidence much easier, since everything is done through the built-in camera and the customer app.

Image from Autonet

Creating such a model, however, requires a lot of carefully labeled images. To get started, the team took 8,000 car images from the open-source Stanford AI Lab cars dataset and hand-labeled an initial 1,200 of them. They then used Python to augment the images through selective cropping and horizontal flipping. This ground truth dataset was used to train a custom auto-label model within the Superb AI platform, with two goals: automatically labeling a much larger dataset, and creating a classifier that sorts images into one of eight compass directions.
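One detail worth noting about flipping orientation-labeled images: mirroring a photo left to right also mirrors the car, so a "front right" view becomes a "front left" view and the label must be remapped. The sketch below shows one way to do this with NumPy. It is not Autonet's code; the eight label names (only `front` and `right_rear` appear in this article), the helper names, and the use of a random crop as a stand-in for "selective cropping" are all assumptions for illustration.

```python
import numpy as np

# Hypothetical eight-way orientation labels. Only "front" and "right_rear"
# are mentioned in the article; the remaining names are assumptions.
ORIENTATIONS = [
    "front", "front_right", "right", "right_rear",
    "rear", "rear_left", "left", "left_front",
]

# Mirroring an image left-to-right swaps the car's left and right sides,
# so each orientation maps to its mirror-image counterpart.
FLIP_MAP = {
    "front": "front",
    "rear": "rear",
    "right": "left",
    "left": "right",
    "front_right": "left_front",
    "left_front": "front_right",
    "right_rear": "rear_left",
    "rear_left": "right_rear",
}


def horizontal_flip(image: np.ndarray, label: str) -> tuple[np.ndarray, str]:
    """Mirror an H x W x C image and remap its orientation label."""
    return image[:, ::-1, :], FLIP_MAP[label]


def random_crop(image: np.ndarray, crop_frac: float, rng: np.random.Generator) -> np.ndarray:
    """Keep a random window covering crop_frac of each spatial dimension.

    Cropping does not change the car's orientation, so the label is reused.
    crop_frac should stay large enough that the car remains recognizable.
    """
    h, w = image.shape[:2]
    ch = max(1, int(round(h * crop_frac)))
    cw = max(1, int(round(w * crop_frac)))
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    return image[top:top + ch, left:left + cw]


def augment(image: np.ndarray, label: str, rng: np.random.Generator,
            crop_frac: float = 0.9) -> list[tuple[np.ndarray, str]]:
    """Return the original sample plus a flipped and a cropped variant."""
    samples = [(image, label), horizontal_flip(image, label)]
    samples.append((random_crop(image, crop_frac, rng), label))
    return samples
```

For example, `augment(img, "front_right", np.random.default_rng(0))` on a 100 x 200 image yields labels `front_right`, `left_front`, and `front_right`, with the cropped copy measuring 90 x 180. Forgetting the remap in `FLIP_MAP` is an easy way to inject systematically wrong labels into an orientation dataset.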

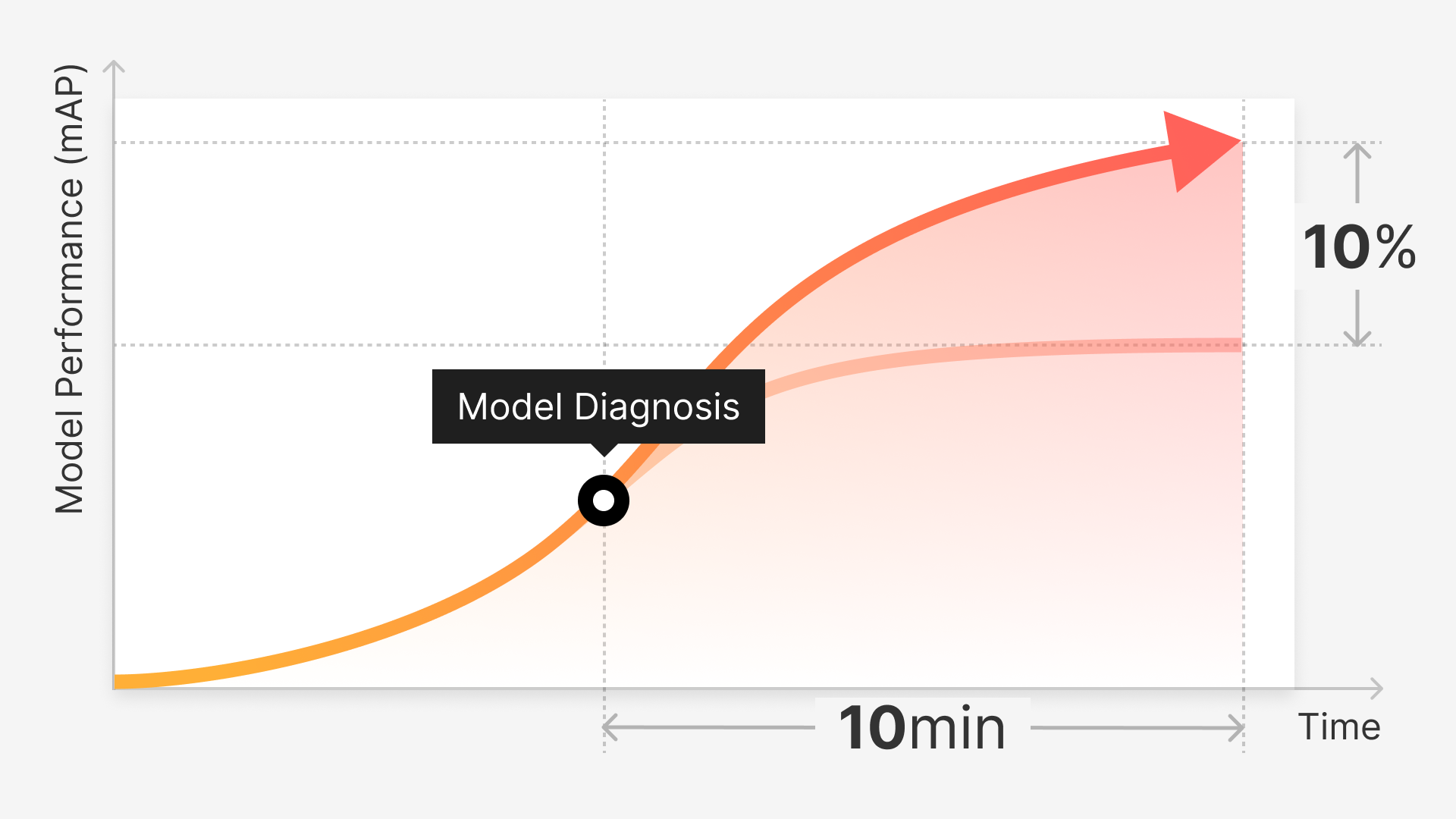

In parallel, they built a custom model in TensorFlow/Keras and trained it on the same ground truth dataset. After careful testing, analysis, and optimization against a validation set, they found that the Superb AI auto-labeler performed substantially better than the Keras model, exceeding 90 percent accuracy on the test set: an overall score of 0.92 across sections (front, right_rear, etc.), compared with 0.81 for the Keras model.
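The article does not say exactly how the "overall score across sections" was computed. Assuming it is plain accuracy, a minimal sketch of scoring two models on a shared test set, with a per-section breakdown and a tally of which orientations get confused, might look like this (the function name and toy labels are hypothetical):

```python
from collections import Counter


def accuracy_report(y_true: list[str], y_pred: list[str]):
    """Return overall accuracy, per-section accuracy, and confusion counts.

    Assumes "score" means accuracy; per-section accuracy is the fraction of
    test images with a given true label that were predicted correctly.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    correct: Counter = Counter()
    total: Counter = Counter()
    confusions: Counter = Counter()
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
        else:
            confusions[(t, p)] += 1  # (true section, predicted section)
    overall = sum(correct.values()) / len(y_true)
    per_section = {c: correct[c] / total[c] for c in total}
    return overall, per_section, confusions
```

Running the same function on each model's predictions for the same test images makes the comparison like-for-like, and the confusion tally shows whether mistakes cluster between neighboring directions (for example `right` versus `right_rear`), which is typical for orientation classifiers.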

In a follow-up test, they auto-labeled another 500 images, reviewed them, and used the combined data to train a new, updated custom auto-label model. Only a few of those 500 images needed any changes at all. After retraining, overall accuracy rose by a further two percentage points, which translated into a five-percentage-point gain on the same test set used previously, all for less than two hours of work (including training time). According to Autonet, this represents a "good return on time investment for most AI projects."
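The workflow described above (auto-label a batch, have a human review it, merge it into the training data, retrain) can be sketched as a single loop step. This is an illustrative sketch only: `predict` stands in for the auto-label model and `review` for the human reviewer, both hypothetical callables, and the retraining itself happens outside this function.

```python
from dataclasses import dataclass
from typing import Callable

# (image_id, orientation label) pairs; the representation is an assumption.
Labeled = list[tuple[str, str]]


@dataclass
class RoundResult:
    training_set: Labeled  # previous data plus the reviewed batch
    num_reviewed: int      # images in the new batch
    num_corrected: int     # images whose proposed label the reviewer changed


def labeling_round(
    training_set: Labeled,
    unlabeled_ids: list[str],
    predict: Callable[[str], str],
    review: Callable[[str, str], str],
) -> RoundResult:
    """One auto-label -> human review -> merge step.

    The caller is expected to retrain the auto-label model on
    result.training_set before the next round.
    """
    proposed = [(i, predict(i)) for i in unlabeled_ids]
    reviewed = [(i, review(i, lab)) for i, lab in proposed]
    corrected = sum(
        1 for (_, before), (_, after) in zip(proposed, reviewed) if before != after
    )
    # Build a new list so the caller's original training set is not mutated.
    return RoundResult(training_set + reviewed, len(reviewed), corrected)
```

Tracking `num_corrected / num_reviewed` per round is a simple way to see the effect the article describes: as the auto-labeler improves, reviewers change fewer labels, so each round costs less human time.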

To learn more about Autonet’s experience augmenting manual labeling, as well as all the details about how they built their custom model and performed their experiment, get the full story here.